Most modern, mid- to large-sized organizations running SAP employ either SAP Business Warehouse (SAP BW) or SAP BW/4HANA systems for business analytics.

These systems load transactional data containing vast amounts of master data from many different types of source systems.

During the transactional data load process, a prerequisite exists within the system to validate that all master data within the transactional records exists in their respective InfoObjects. If the master data is not found, it is automatically created within its respective InfoObject.

Many organizations have governance policies governing master data and prohibit the creation of master data on the fly for a variety of reasons. For example, master data and its attributes are used in further business logic, or master data is consumed in upstream applications (SAP Business Planning and Consolidation, SAP Power BI, etc.).

This blog post explores a creative solution to address this challenge by utilizing a combination of existing SAP BW toolsets and a bit of customization.

The Challenge

The main challenge is to explore and identify a solution which meets the following criteria:

- Stops creation of master data on the fly while loading business transactional data into BW InfoProviders.

- Allows loading transactional records into the BW system for an existing master data set.

- Analyzes and finds erroneous master data within the transactional data that is being loaded, and then sends notifications to a dynamic email distribution list with a summary of missing master data for necessary action.

- Allows flexibility to reprocess erroneous records after the master data has been reviewed and corrected.

- Ensures the integrity of transactional and master data set.

Solution

The criteria listed above present many challenges, which can be addressed by many different solutions. After research and meticulous planning, we’ve created a comprehensive solution, described below.

Assumptions:

- We’ll be using an email service, and, in our case, it was configured by Basis administrators on an SAP BW system and is currently active.

- We’ll utilize a custom ABAP program that is executed after the main data transfer process (DTP) processes in the Process Chain.

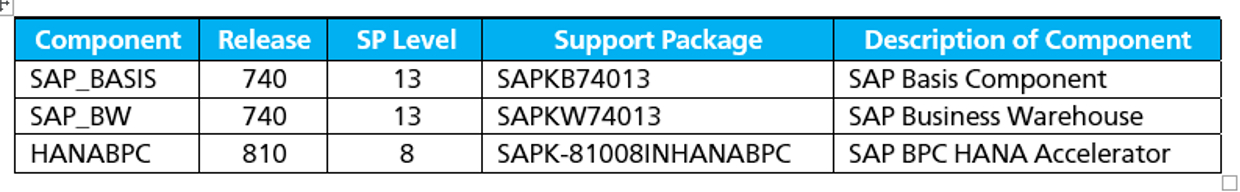

- This solution has been developed and deployed on an SAP BW system with the specifications outlined below, but is also applicable to SAP BW/4HANA systems as well.

Base DTP Configuration

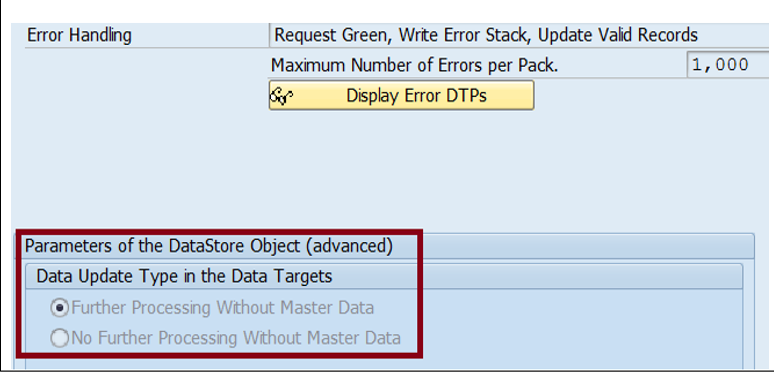

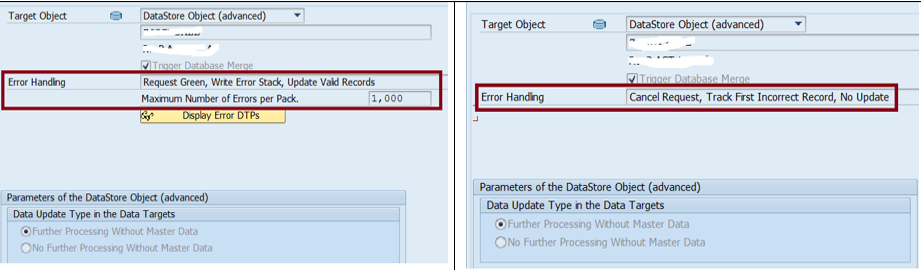

The “update” tab of the DTP configuration provides the following settings for advanced DataStore Objects (advanced DSOs)

- Further processing without master data

- No further processing without master data

The first option allows for loading transactional data without master data being available in its respective InfoObjects. The second option ensures the presence of the requisite master data in InfoObjects used by advanced DSOs (or other InfoProviders) and will not process transaction data into targets.

The challenge with this configuration check is it reverts to “all” or “none,” meaning the setting is applicable to either master data for all of the InfoObjects (first figure below) or none (second figure).

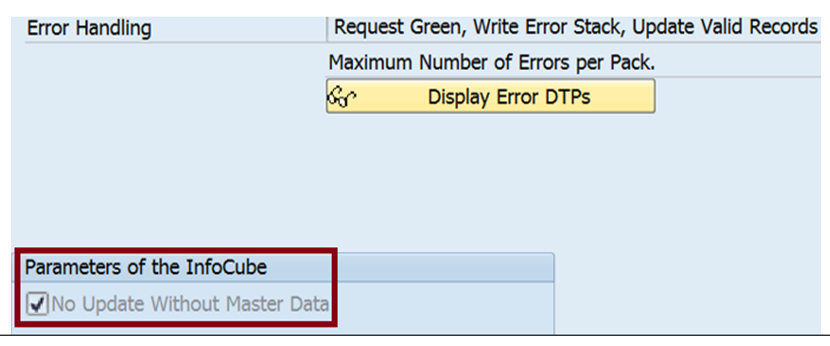

Note: similar configuration settings are available in the “update” tab of the DTP for InfoCubes.

For simplicity (and this is the most widely used scenario), our solution focuses on advanced DSOs only as the target for the first option listed above, “further processing without master data.” This setting will allow all transaction data to be loaded even if master data is not available in constituent InfoObjects, and the referential integrity concept is used to prevent the creation of master data on the fly. It, however, has a limitation—delayed availability of erroneous transaction data in target InfoProviders until master data challenges are resolved.

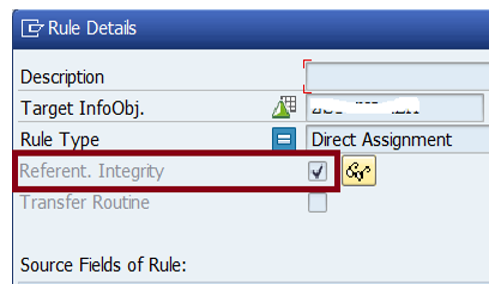

Transformation Setting: Referential Integrity

SAP BW transformation configuration provides a standard functionality for ensuring referential integrity at field-level mapping. It can be turned on by adding a checkmark as shown in the figure below. This checkmark ensures that the master data value contained within the transactional data is present in the InfoObject used in the target InfoProvider. If this check fails, the following will happen depending on the DTP configuration settings:

- DTP fails altogether: for option ”no further processing without master data.”

- Erroneous transaction is recorded into an error DTP (or error stack): for option “further processing without master data.”

For the referential integrity check to work, the DTP option (under the “update” tab) should be set to ‘further processing without master data’ for advanced DSOs. The other option (“no further processing without master data”) overrides the referential integrity check.

DTP Configuration: Error Handling

The configuration settings covered in the two prior steps will force the DTP to error out when referential integrity fails. The DTP failure must be handled by an additional configuration to ensure a successful load into its targets. DTP configuration offers error handling as standard configuration:

- DTP settings

- Error handling = “Request green, write error stack, update valid records”

- Max errors = 1000 (or another reasonable number)

- Error DTP settings

- Error handling = “cancel request, track first incorrect record, no update”

With the above settings, if the referential integrity check fails, erroneous transactional records get recorded into the Error Stack. The error messages get recorded in an error message log, and valid transactional data gets loaded into target InfoProvider.

Error Stack Analysis

The Error Stack is a transparent table within the SAP system. It has the same structure as its data source. The table stores transaction data temporarily until it gets processed to its target or is manually deleted from table. Below are the main steps of performing an Error Stack analysis.

- A custom ABAP solution should be developed to analyze the Error Stack, using two input parameters:

- P_DTP: DTP technical ID for which transaction data load errors need to be analyzed.

- P_DEL: A flag (Y/N) to indicate if the Error Stack (and messages) should be cleared or purged.

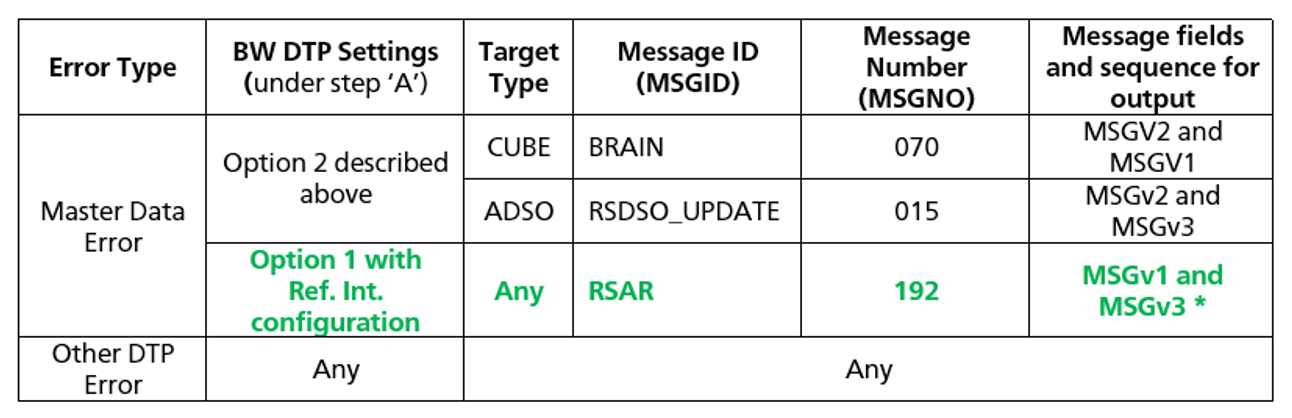

- Use the SAP table RSBKREQUEST (“DTP Request”) to find the latest request ID (REQUID) and target type (TGTTP) for incoming DTP IDs (i.e. RSBKREQUEST/DTP = P_DTP).

- Use the SAP table RSBERRORLOG (“Logs for Incorrect Records”) with the request ID (REQUID) found in the previous step, and apply the following logic to capture errors messages:

- If DTP processing was completed successfully, the table RSBERRORLOG will not have any entry for the latest request ID (REQUID), and hence no further action is needed.

- If the table RSBERRORLOG has entries for the request IDs, that means there are some transactions which have failed during the data load process. All these error messages are captured in an internal table, parsed, and analyzed based on the logic in the table below.

*This blog post focuses on solution for this configuration.

-

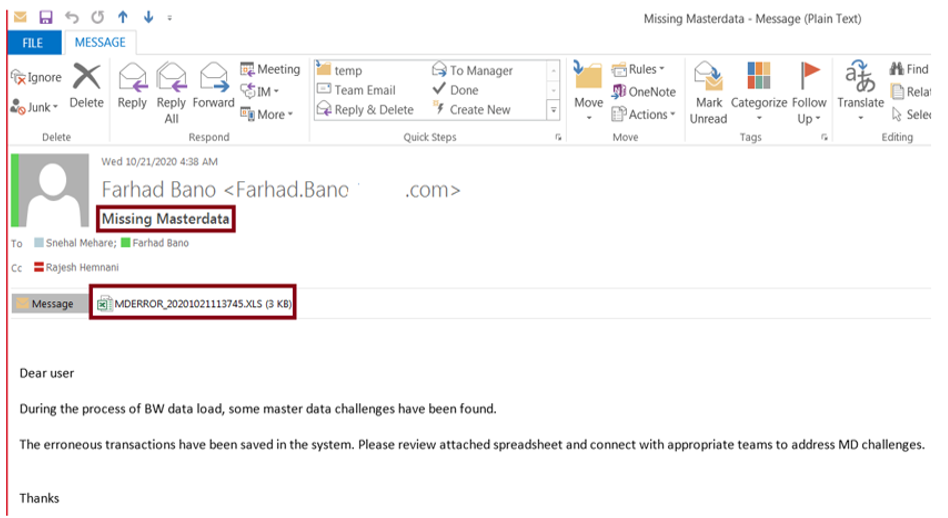

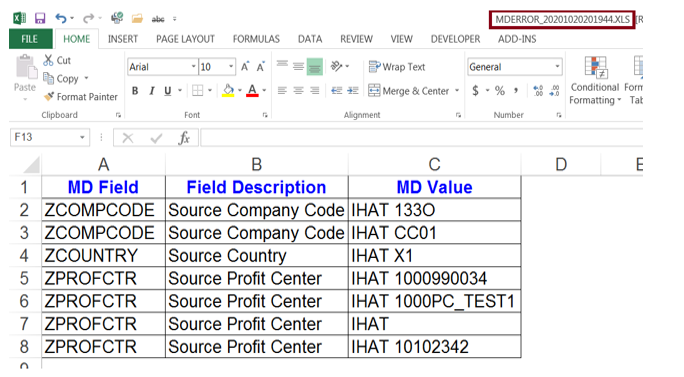

- All error messages are then encapsulated in a spreadsheet and sent as an attachment via email to provide detailed information to recipients. Email messages are sent using SAP standard FM SO_DOCUMENT_SEND_API1 with email DLs that are maintained in a custom table.

- Optionally, error messages and the Error Stack could be purged depending on the solution (and assuming error DTP is executed before main DTP), for example:

- Full load DTP: Error Stack/error messages should be cleared as the next execution will bring in the same data set. Without clearing the Error Stack, it may double up the data in the target.

- Delta load DTP: Error Stack/error messages should not be cleared as the next DTP execution will not bring in the same data set. If the Error Stack is cleared, some business transactions will not make it to the target. Until master data challenges are resolved, the next data load may result in the same set of errors, causing an additional data duplicity error in the Error Stack—called serialization error.

- If P_DEL = Y, then purge all entries from the following:

- RSBERRORLOG for latest request ID (REQUID): purges all error messages.

- Error Stack of incoming DTP (P_DTP): purges erroneous source transactions. Find the Error Stack name (field - ODSNAME_TECH) from table RSTSODS (ODS for the transfer structure) for incoming DTP, i.e. USEROBJ = P_DTP.

Results

The solution we have created uses configurations in the BW Process Chain that executes the error DTP, regular DTP, and an ABAP program with two parameters. When the process chain is executed, the following occurs:

- The error DTP processes all erroneous transactions to a target InfoProvider (assuming all master data issues have been resolved).

- The regular DTP processes all new business transactions to a target InfoProvider.

- The ABAP program performs analysis of master data (among other) errors, encapsulates all master data errors in a spreadsheet, and sends an email to relevant parties. A sample email message and sample spreadsheet (with details) are depicted in the figures below.

- If P_DEL = Y (for full DTP loads),

- All error messages (from the message log) and erroneous source transactions (from the Error Stack) are purged to avoid the doubling of data in the next run of the process chain.

Conclusion

Typically, SAP BW, SAP BW/4HANA, and the transactional data load process involves the extraction of data from the source system, transformation, and load into InfoProviders. While performing the data load, it is a pre-requisite to have master data available in relevant InfoObjects and the master data set is created if it doesn’t exist.

When organizational policies around master data doesn’t allow the creation of master data on the fly, different solutions have to be adopted to address the challenges. In this post, a user-friendly solution was presented by utilizing available toolsets in a creative manner, with a bit of customization.

Comments