Process integration is about building distributed business processes. The exchanged messages typically connect steps of a business process across different systems or tenants and then trigger the next process step on the receiving side.

Creating a sales order, for example, may trigger several sales-related process steps in the receiving system, such as delivery and billing. Data integration is different: the data is transferred by a generic mechanism for various purposes, such as analytics, machine learning, or simply synchronizing two data stores in a replication scenario. When sales orders are replicated into a data warehouse or a data lake, the replication doesn’t trigger any business process steps, such as sales and delivery activities.

We’ll discuss key infrastructures for data integration in this blog post: CDS-based data extraction, the Data Replication Framework (DRF), and SAP Master Data Integration.

CDS-Based Data Extraction

Although several technologies are available for data integration, SAP positions CDS-based data extraction as the strategic option. This section gives an overview of CDS-based data extraction in SAP S/4HANA, which works in both on-premise and cloud environments. It’s used, for example, for feeding data from SAP S/4HANA into SAP Business Warehouse (SAP BW) and into SAP Data Intelligence. CDS-based data extraction is a good example of how CDS views and the VDM are used throughout the SAP S/4HANA architecture. CDS extraction views provide a general data extraction model, which is made available through several channels for the different data integration scenarios.

CDS-Based Extraction Overview

CDS-based data extraction is implemented based on CDS views that are marked as extraction views with special CDS annotations (@Analytics.dataExtraction.enabled). These extraction views are typically basic views or composite views in the VDM. During extraction, the database views generated from the CDS extraction views are used to read the data to be extracted.

Extraction can be done in two ways: Full extraction extracts all available data from the extraction views. Delta extraction means that after a full extraction, only changes are extracted from then on. This requires that the CDS extraction view is marked as delta-enabled (@Analytics.dataExtraction.delta.*).

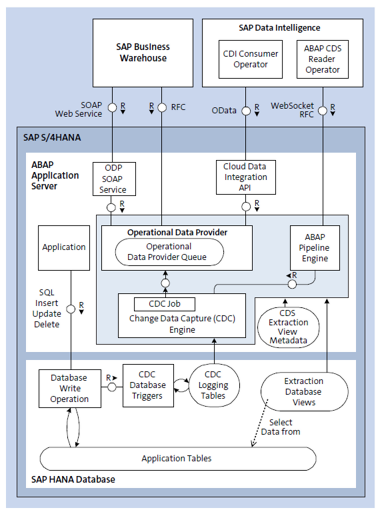

The figure above shows the architecture of CDS-based data extraction for data integration with SAP BW and with SAP Data Intelligence. There are several APIs through which external systems can extract data based on CDS views:

- The operational data provider (ODP) framework is part of the infrastructure for analytics and for extraction of operational data from SAP business systems such as SAP S/4HANA. Among other functions, it supports data extraction with corresponding APIs and extraction queues. In an on-premise scenario, systems such as SAP BW can call an RFC provided by the ODP framework in SAP S/4HANA. In SAP S/4HANA Cloud, the same API can be used via a SOAP service, which basically wraps the RFC.

- CDS-based data extraction is also possible through the Cloud Data Integration (CDI) API. CDI provides a data-extraction API based on open standards and supports data extraction via OData services. As shown, this option is also built on top of the ODP framework. The CDI consumer operator in SAP Data Intelligence can be used to extract data from SAP S/4HANA through the CDI OData API.

- The ABAP CDS reader operator is an alternative option for extracting data into SAP Data Intelligence. It uses an RFC via WebSocket to extract data from the ABAP pipeline engine in the SAP S/4HANA system. The ABAP pipeline engine can execute ABAP operators used in data pipelines in SAP Data Intelligence, such as the ABAP CDS reader operator.

For full extraction, the ODP framework and ABAP pipeline engine read all currently available data records from the extraction views.

Delta Extraction with the Change Data Capture Framework

For delta extraction, the architecture depicted above uses trigger-based change data capture, a concept known from SAP Landscape Transformation. In SAP S/4HANA, this is implemented by the change data capture engine. For trigger-based delta extraction, database triggers are created for the database tables that underlie the extraction views. Whenever records in these tables are created, deleted, or updated, corresponding change information is written to change data capture logging tables. As mentioned earlier, the CDS extraction views must be annotated accordingly to indicate that they support this option. For complex views involving several tables, the view developer must also provide information about the mapping between views and tables as part of the definition of the CDS extraction view. For simple cases, the system can do the mapping automatically. Delta extraction with the change data capture engine has some limitations, which require that the views aren’t too complex. Delta-enabled extraction views must not contain aggregates or too many joins, for example.

Change data capture for CDS-based delta extraction works as follows: A change data capture job is executed at regular times to extract changed data from application tables. For extraction, the change data capture engine uses the logging tables to determine what was changed and the database views generated from the CDS extraction views to read the data. The change data capture job writes the extracted data to the ODP queue of the ODP framework, from which it can be fetched via APIs as described previously.

As already explained, the Cloud Data Integration API is built on top of the ODP framework and therefore uses the same mechanism for delta extraction. Delta extraction is also supported by the ABAP pipeline. For delta extraction, it calls the change data capture engine, which then reads the data based on logging tables and the database views generated from the CDS extraction views.

Before the introduction of the trigger-based change data capture method, delta extraction was available only based on timestamps. This requires a timestamp field in the data model to detect what has changed from a given point in time. With trigger-based change data capture, this restriction on the data model isn’t needed. In addition, timestamp-based delta extraction requires more effort at runtime, especially for detecting deletions. CDS-based delta extraction with timestamps is implemented by the ODP framework. It’s still available, but SAP recommends using trigger-based change data capture for new developments.
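To see why deletions are the hard part of the timestamp approach, consider the following minimal sketch. It is an illustration only: the field names `id` and `changed_at` and both functions are hypothetical, and comparing key sets is just one conceivable way to find deletions, not a description of how the ODP framework does it.

```python
from datetime import datetime


def timestamp_delta(rows, last_extraction):
    """Return the rows whose timestamp is later than the last extraction."""
    return [row for row in rows if row["changed_at"] > last_extraction]


def deleted_keys(previously_extracted, rows):
    """A deleted row has no timestamp left to compare, so compare key sets instead."""
    return sorted(previously_extracted - {row["id"] for row in rows})


def demo():
    t0 = datetime(2024, 1, 1)
    last_extraction = datetime(2024, 1, 2)
    t2 = datetime(2024, 1, 3)

    # State at the time of the full extraction: three records.
    before = [{"id": key, "changed_at": t0} for key in (1, 2, 3)]
    extracted = {row["id"] for row in before}

    # Afterwards, record 1 is updated and record 3 is deleted.
    after = [{"id": 1, "changed_at": t2}, {"id": 2, "changed_at": t0}]

    changed = timestamp_delta(after, last_extraction)  # finds record 1 only
    deleted = deleted_keys(extracted, after)           # needs all current keys
    return changed, deleted
```

The timestamp filter alone never reports record 3, because the deleted row is simply absent. Finding it in this sketch requires looking at all current keys, which hints at the additional runtime effort mentioned above.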

Data Replication Framework

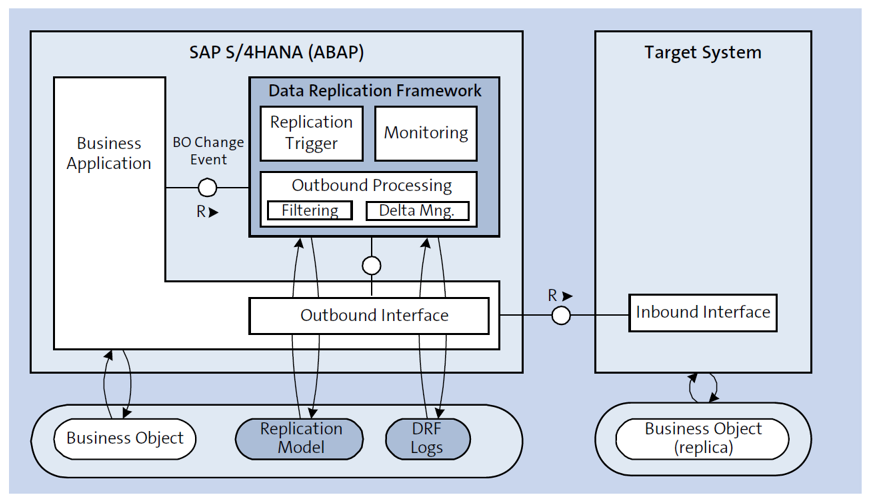

The Data Replication Framework (DRF) is a local business object change event processor that decides which business object instances will be replicated to defined target systems. It’s implemented in ABAP and part of the SAP S/4HANA software stack. Its architecture is shown in the figure above. SAP S/4HANA business applications can register local change events of their business objects at the DRF and connect corresponding outbound interfaces of their business objects. In a productive SAP S/4HANA landscape, integration experts can define a replication model that defines, for a given business object, the filter conditions and target systems for replication.

At runtime, the application informs the DRF about a business object create or change event, such as the creation of a sales order. The DRF checks the filter conditions for sales orders. If the result is that this object will be replicated, the DRF sends the business object instance via the given outbound interface—for example, a SOAP service—to the defined target system(s).

The DRF always sends complete business object instances. The integration follows the push mechanism. Business object change events initiate the downstream transfer of the business object instances to the defined target systems. The DRF is complemented by the key mapping framework and the value mapping framework to support non-harmonized identifiers and code lists.
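The interplay of replication model, filter conditions, and push-based sending can be sketched in a few lines. The DRF itself is implemented in ABAP; the Python below is only a conceptual model, and every name in it (`ReplicationModel`, `ReplicationFramework`, `on_change_event`, the `sales_org` field, the target system names) is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReplicationModel:
    """Filter condition and target systems for one business object type."""
    object_type: str
    filter_condition: Callable[[dict], bool]
    target_systems: list


@dataclass
class ReplicationFramework:
    models: list = field(default_factory=list)
    outbound: dict = field(default_factory=dict)  # object type -> send function

    def register_outbound(self, object_type, send):
        """Connect the outbound interface of a business object type."""
        self.outbound[object_type] = send

    def on_change_event(self, object_type, instance):
        """Check the filters and push the complete instance to each target."""
        reached = []
        for model in self.models:
            if model.object_type != object_type:
                continue
            if not model.filter_condition(instance):
                continue
            for target in model.target_systems:
                self.outbound[object_type](target, instance)
                reached.append(target)
        return reached


def demo():
    sent = []  # records (target, order id) pairs instead of calling a real service
    drf = ReplicationFramework()
    drf.register_outbound("SalesOrder", lambda target, so: sent.append((target, so["id"])))
    drf.models.append(
        ReplicationModel(
            object_type="SalesOrder",
            filter_condition=lambda so: so["sales_org"] == "1000",
            target_systems=["CRM", "BW"],
        )
    )
    drf.on_change_event("SalesOrder", {"id": "SO-1", "sales_org": "1000"})
    drf.on_change_event("SalesOrder", {"id": "SO-2", "sales_org": "2000"})
    return sent
```

In this sketch, only the first sales order passes the filter, so it is pushed to both target systems while the second one is not sent at all.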

SAP S/4HANA also uses the DRF as an event trigger for master data distribution with SAP Master Data Integration, which we’ll discuss in the next section.

SAP Master Data Integration

Cloud architecture and operation models rely on distributed business services. A prerequisite for this is the exchange and synchronization of common master data objects among these business services. SAP Master Data Integration is a multitenant cloud service for master data integration that provides a consistent view of master data across a hybrid landscape. Technically, SAP Master Data Integration is a generic master data distribution engine. Business applications and services create and store master data in their local persistence. They use the master data integration service to distribute the master data objects and ongoing updates. The master data integration service doesn’t process application-specific logic, but it performs some basic validations of the incoming data, for example, schema validations. SAP Master Data Integration uses master data models, which follow SAP One Domain Model, SAP’s framework for aligned data models that are shared across applications.

When creating or changing master data, the business application calls the change request API of SAP Master Data Integration. SAP Master Data Integration validates the incoming data and writes all accepted changes to a log. Applications interested in this master data object use the log API to read the change events. They can set filters to influence what master data they want to get. Filtering can be done on the instance level (to specify which master data records they want) and even on the level of fields to be included.
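The pattern of validated change requests, a change log, and filtered log reads can be modeled as follows. This is a conceptual sketch only and does not reflect the actual service API: the class `MasterDataLog`, the methods `change_request` and `read_log`, the sequence numbers, and the cost center fields are hypothetical, and the "schema validation" is reduced to a check for required fields.

```python
from dataclasses import dataclass, field


@dataclass
class MasterDataLog:
    required_fields: dict  # object type -> set of required field names
    events: list = field(default_factory=list)

    def change_request(self, object_type, record):
        """Validate the incoming record and log it if accepted."""
        required = self.required_fields.get(object_type)
        if required is None or not required <= set(record):
            return False  # unknown type or missing required fields
        self.events.append(
            {"seq": len(self.events) + 1, "type": object_type, "data": dict(record)}
        )
        return True

    def read_log(self, object_type, since=0, instance_filter=None, fields=None):
        """Return change events after `since`, filtered by instance and by field."""
        result = []
        for event in self.events:
            if event["seq"] <= since or event["type"] != object_type:
                continue
            data = event["data"]
            if instance_filter is not None and not instance_filter(data):
                continue  # instance-level filter: skip unwanted records
            if fields is not None:
                # Field-level filter: keep only the requested fields.
                data = {name: value for name, value in data.items() if name in fields}
            result.append({"seq": event["seq"], "data": data})
        return result


def demo():
    log = MasterDataLog(required_fields={"CostCenter": {"id", "name"}})
    rejected = log.change_request("CostCenter", {"id": "CC9"})  # no name
    log.change_request("CostCenter", {"id": "CC1", "name": "Sales", "company": "1000"})
    log.change_request("CostCenter", {"id": "CC2", "name": "IT", "company": "2000"})
    events = log.read_log(
        "CostCenter",
        instance_filter=lambda record: record.get("company") == "1000",
        fields={"id", "name"},
    )
    return rejected, events
```

A consumer in this sketch would remember the last `seq` it processed and pass it as `since` on the next read, so that it receives only the changes logged in the meantime.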

SAP S/4HANA uses SAP Master Data Integration in several end-to-end processes to exchange various types of master data with other SAP applications. In the source-to-pay end-to-end process, for example, SAP Master Data Integration is used to share supplier master data and cost center master data from SAP S/4HANA Cloud with SAP Ariba. Another example is the integration between SAP S/4HANA and SAP SuccessFactors Employee Central. Here, SAP Master Data Integration is used to exchange master data such as workforce person, job classifications, and organizational units coming from SAP SuccessFactors Employee Central, and cost center master data coming from SAP S/4HANA. Many more types of SAP S/4HANA master data can be exchanged through SAP Master Data Integration, for example, bank master data, public sector management data, project controlling objects, and business partners. Enabling further master data objects for exchange through SAP Master Data Integration is planned to fully support the intelligent enterprise. You can explore planned integrations with SAP Road Map Explorer at https://roadmaps.sap.com/board, for example, with filters set to product “SAP S/4HANA Cloud” and focus topic “integration”.

Editor’s note: This post has been adapted from a section of the book SAP S/4HANA Architecture by Thomas Saueressig, Tobias Stein, Jochen Boeder, and Wolfram Kleis.
